Researchers use artificial intelligence to hunt down human intelligence


(Nanowerk News) The brain is a wonderful and mysterious thing: three pounds of gelatinous soft tissue through which we interact with the world, generate ideas, and construct meaning and representation. Understanding where and how this occurs has long been one of the fundamental goals of neuroscience.

In recent years, researchers have turned to artificial intelligence to make sense of brain activity as measured by fMRI, tuning AI models to the data in an attempt to understand, with increasing specificity, what people are thinking and what those thoughts look like in their brains. An interdisciplinary team at UC Santa Barbara is among those pushing the boundaries, with a method that applies deep learning to fMRI data to create complex reconstructions of what research subjects saw.

“There have been several projects trying to translate fMRI signals into images, especially as neuroscientists want to understand how the brain processes visual information,” said Sikun Lin, lead author of a paper presented at the NeurIPS conference in November 2022 (“Mind Reader: Reconstructing complex images from brain activities”).

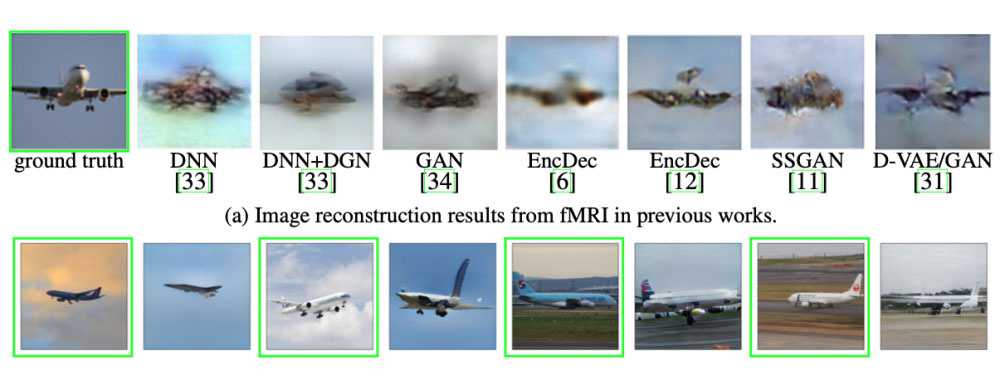

According to Lin, UCSB computer science professor Ambuj Singh and cognitive neuroscientist Thomas Sprague, the images produced in this study are photorealistic and accurately reflect the original “ground truth” images. They noted that previous reconstructions did not produce images with the same level of fidelity.

Key to their approach is that, in addition to images, a layer of information is added through textual descriptions, a move Lin says was made to add data for training their deep learning model. Building on a publicly available data set, they used CLIP (Contrastive Language-Image Pre-training) to encode high-quality, objective text descriptions paired with the observed images, then mapped the fMRI data recorded while subjects viewed those images onto the CLIP space. From there, they used the output of the mapping model as a condition for training a generative model to reconstruct the image.
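The mapping step can be pictured with a small toy example. The sketch below is not the team's model: it swaps in plain ridge regression for their learned mapping and uses synthetic arrays in place of real fMRI recordings and CLIP embeddings. The names `fit_ridge` and `cosine_rows`, the array sizes, and the noise level are all assumptions made for illustration.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)

def cosine_rows(A, B):
    """Cosine similarity between corresponding rows of A and B."""
    A = A / np.linalg.norm(A, axis=1, keepdims=True)
    B = B / np.linalg.norm(B, axis=1, keepdims=True)
    return np.sum(A * B, axis=1)

rng = np.random.default_rng(0)
n_train, n_test, n_voxels, embed_dim = 400, 50, 200, 16

# Synthetic stand-ins (assumption): real work would use measured fMRI
# responses and the CLIP embeddings of the images and captions shown.
true_map = rng.normal(size=(n_voxels, embed_dim))
fmri = rng.normal(size=(n_train + n_test, n_voxels))
noise = 0.5 * rng.normal(size=(n_train + n_test, embed_dim))
clip_like = fmri @ true_map + noise

# Learn a linear map from voxel space to the embedding space.
W = fit_ridge(fmri[:n_train], clip_like[:n_train], alpha=1.0)

# Predict embeddings for held-out trials and compare with the targets.
predicted = fmri[n_train:] @ W
mean_cosine = float(cosine_rows(predicted, clip_like[n_train:]).mean())
print(f"mean cosine similarity on held-out trials: {mean_cosine:.3f}")
```

In a full pipeline, the predicted embedding for each trial would then serve as the conditioning input to an image generator; that stage is left out here.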

The resulting reconstructions closely resemble the original images the subject saw, more closely, in fact, than previous attempts to reconstruct images from fMRI data. Studies that have followed, including the well-known “High-resolution image reconstruction with latent diffusion models from human brain activity” from Japan, have outlined methods for efficiently turning limited data into clear images.

What’s more, the research sheds light on an important aspect of human intelligence: semantics.

“One of the main points of this paper is that visual processing is inherently semantic,” said Lin. According to the paper, “the brain is naturally multimodal,” that is, we use several modes of information at different levels to derive meaning from a visual scene, such as what stands out, or the relationships between objects in a scene. “Using visual representations alone might make it more difficult to reconstruct an image,” Lin continued, “but using a semantic representation such as CLIP, which incorporates text such as an image description, is more coherent with the way the brain processes information.”

“The science in this case is whether the structure of the model can tell you something about how the brain works,” Singh added. “And that’s what we wanted to try to find.” In additional experiments, for example, the researchers found that fMRI brain signals encode a lot of redundant information: even after masking more than 80% of the fMRI signal, the remaining 10–20% contained enough data to reconstruct an image in the same category as the original image, even though no image information was fed into the signal reconstruction pipeline (the researchers worked only from fMRI data).
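The masking idea can also be illustrated with a toy example. The sketch below is an assumption-laden stand-in, not the experiment from the paper: it builds synthetic "voxel" patterns for four hypothetical categories, zeroes out 80% of the voxels at random, and checks whether a simple nearest-prototype rule can still recover the category from what remains. The helper `mask_voxels` and all sizes are made up for illustration.

```python
import numpy as np

def mask_voxels(signals, keep_fraction, rng):
    """Zero out a random subset of voxels, keeping about keep_fraction of them."""
    n_voxels = signals.shape[1]
    n_keep = max(1, int(round(keep_fraction * n_voxels)))
    kept = rng.choice(n_voxels, size=n_keep, replace=False)
    masked = np.zeros_like(signals)
    masked[:, kept] = signals[:, kept]
    return masked, kept

rng = np.random.default_rng(0)
n_categories, n_voxels, trials_per_category = 4, 500, 30

# Toy data (assumption): each category has its own voxel pattern, and every
# trial is that pattern plus independent noise.
prototypes = rng.normal(size=(n_categories, n_voxels))
labels = np.repeat(np.arange(n_categories), trials_per_category)
signals = prototypes[labels] + rng.normal(size=(labels.size, n_voxels))

# Keep only 20% of the voxels.
masked, kept = mask_voxels(signals, keep_fraction=0.2, rng=rng)

# Nearest-prototype decoding using only the surviving voxels.
trial_part = masked[:, kept][:, None, :]        # (trials, 1, kept voxels)
proto_part = prototypes[:, kept][None, :, :]    # (1, categories, kept voxels)
distances = ((trial_part - proto_part) ** 2).sum(axis=2)
accuracy = float((distances.argmin(axis=1) == labels).mean())
print(f"category accuracy with 80% of voxels masked: {accuracy:.2f}")
```

Because the category information in this toy data is spread across many voxels, a small random subset is enough to tell the categories apart, which is the intuition behind the redundancy finding.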

“This work represents a true paradigm shift in the accuracy and clarity of image reconstruction methods,” said Sprague. “Previous work focused on very simple stimuli, because our modeling approaches were much simpler. Now, with this new image reconstruction method, we can advance the experiments we are doing in cognitive computational neuroscience to use naturalistic and realistic stimuli without compromising our ability to come up with clear conclusions.”

Today, the reconstruction of brain data into “real” images continues to be labor intensive and beyond the reach of ordinary use, not to mention the fact that each model is specific to the person whose brain generated the fMRI data. But that hasn’t stopped researchers from pondering the implications of being able to decode what a person is thinking, down to the layers of meaning that are very specific to each thought.

“What I find interesting about this project is whether it’s possible to preserve a person’s cognitive state, and see how these states uniquely define them,” said Singh. According to Sprague, this method will allow neuroscientists to carry out further studies that measure how the brain changes its representation of stimuli, including rich and complex scenes, across changing tasks. “This is a critical development that will address fundamental questions about how the brain represents information during dynamic cognitive tasks, including those requiring attention, memory and decision making,” he said.

One of the areas they are exploring now is figuring out what and how much is shared between brains so that AI models can be built without having to start from scratch every time.

“The basic idea is that human brains across many subjects share some hidden latent similarities,” said Christos Zangos, a doctoral student researcher in Singh’s lab. “And based on that, I’m currently working on the exact same framework, but I’m trying to train with different partitions of the dataset to see how far, using small amounts of data, we can build a model for a new subject.”
